Better openpilot Experience (in beta)

Better file management, nicer UI, video streaming, joystick control, and more for your openpilot device.

There are three components: Asius Connect, AsiusPilot (our openpilot fork), and the Asius API. Use them together or pick only what you need. You can try Asius Connect right now. It works with stock openpilot, so just log in with your Comma, Konik, or Asius account.

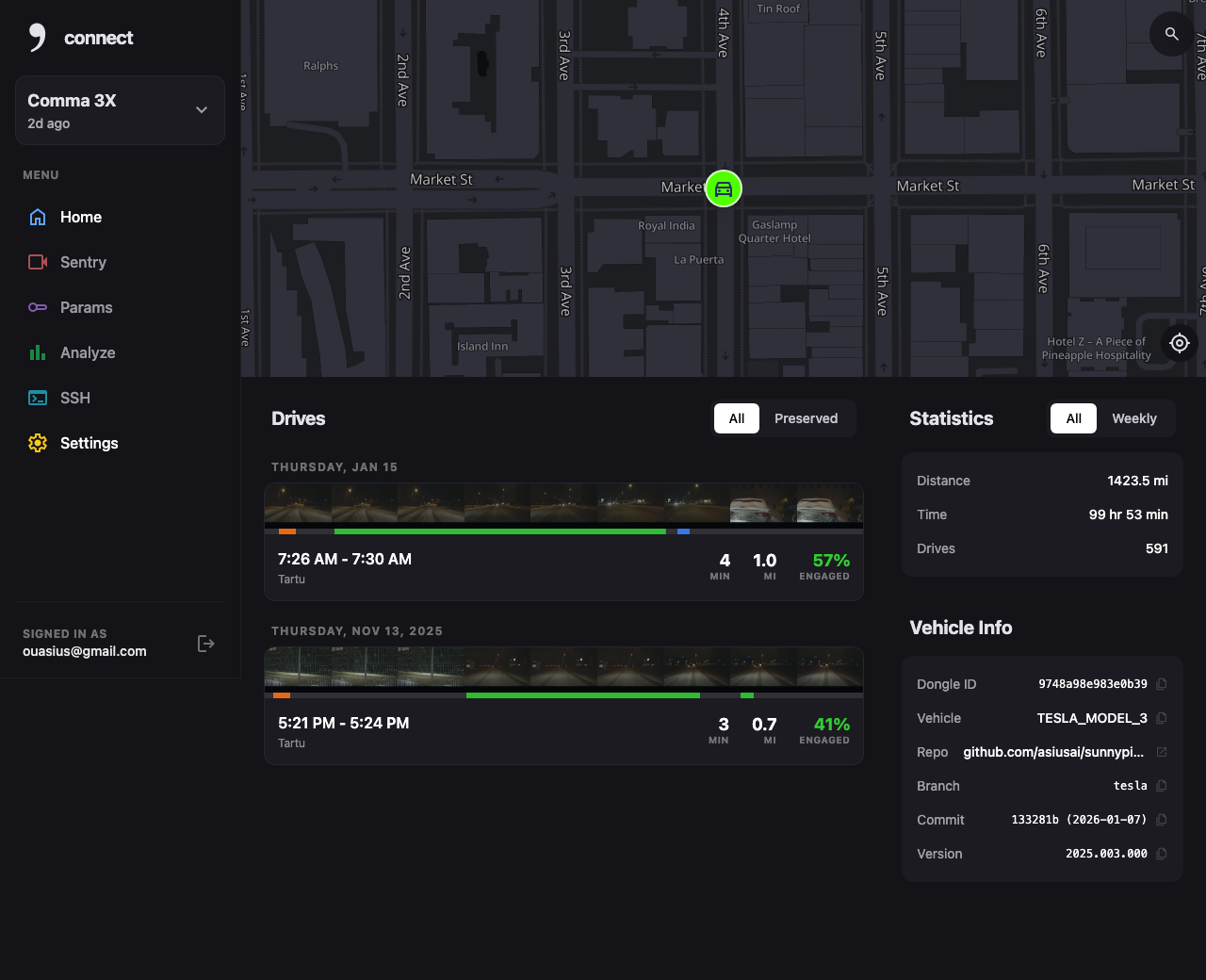

Asius Connect

Our web frontend, with a better UX, a high-res video player, video rendering, file management, and more.

Works with stock openpilot, with no modifications needed. It supports the Comma, Konik, and Asius backends; just pick your provider at login.

AsiusPilot

Modified openpilot with remote streaming, joystick control, and device params editing.

To install, reset your device, then enter installer.asius.ai as the custom software URL during setup.

Asius API (in alpha)

A cloud backend with 1 TB of storage per device, fast video playback, and no device blocking.

After installing AsiusPilot, enable “Asius API” in the device settings, then use Asius Connect from then on.

Feature Comparison

| Feature | Stock | Asius Connect | AsiusPilot | Asius API |
|---|---|---|---|---|
| Low-Res video playback | ✅ | ✅ | ✅ | ✅ |
| High-Res video playback | ❌ | ✅ | ✅ | ✅ |
| High-Res video/segment mp4 download | ❌ | ✅ | ✅ | ✅ |
| Speed and OP UI in video player | ❌ | ✅ | ✅ | ✅ |
| File management | 🟡 (basic) | ✅ | ✅ | ✅ |
| Online video rendering | ❌ | ✅ | ✅ | ✅ |
| Detailed route info | ❌ | ✅ | ✅ | ✅ |
| Share route with signature | ❌ | ✅ | ✅ | ✅ |
| Progressive Web App | ❌ | ✅ | ✅ | ✅ |
| Quick action buttons | ❌ | ✅ | ✅ | ✅ |
| - | | | | |
| Remote snapshot | ✅ | ✅ | ✅ | ✅ |
| Remote SSH | 🟡 (prime only) | ✅ | ✅ | ✅ |
| Remote SSH from browser | ❌ | ✅ | ✅ | ✅ |
| Remote video streaming | ❌ | ❌ | ✅ | ✅ |
| Remote joystick control | ❌ | ❌ | ✅ | ✅ |
| Remote device params editing | ❌ | ❌ | ✅ | ✅ |
| Lane turn desires | ❌ | ❌ | ✅ | ✅ |
| Bluetooth support | ❌ | ❌ | ✅ | ✅ |
| - | | | | |
| Fast High-Res video playback | ❌ | ❌ | ❌ | ✅ |
| Fast High-Res video rendering | ❌ | ❌ | ❌ | ✅ |
| 1TB storage per device | ❌ | ❌ | ❌ | ✅ |
| Use any device | ❌ | ❌ | ❌ | ✅ |
| No device blocking | ❌ | ❌ | ❌ | ✅ |